
CV | Google Scholar | Github

Hello, I am a PhD student in Computer Science at the University of Maryland, College Park, advised by Ming C. Lin. My research goal is to develop techniques for applying machine learning to geometric problems in computer graphics, enabling new ways for artists and engineers to create. My research interests include animation and simulation, geometry processing, and geometric deep learning. I have previously interned at Roblox and Google. Before that, I worked as a software engineer at Amazon. I completed my MS in Computer Science at NYU and my BA in Film Production at the USC School of Cinematic Arts. Don't hesitate to reach out at: gaoalexander [at] gmail dot com


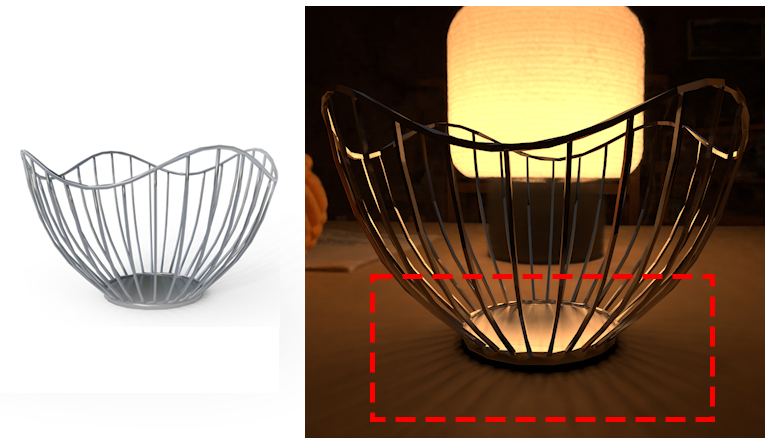

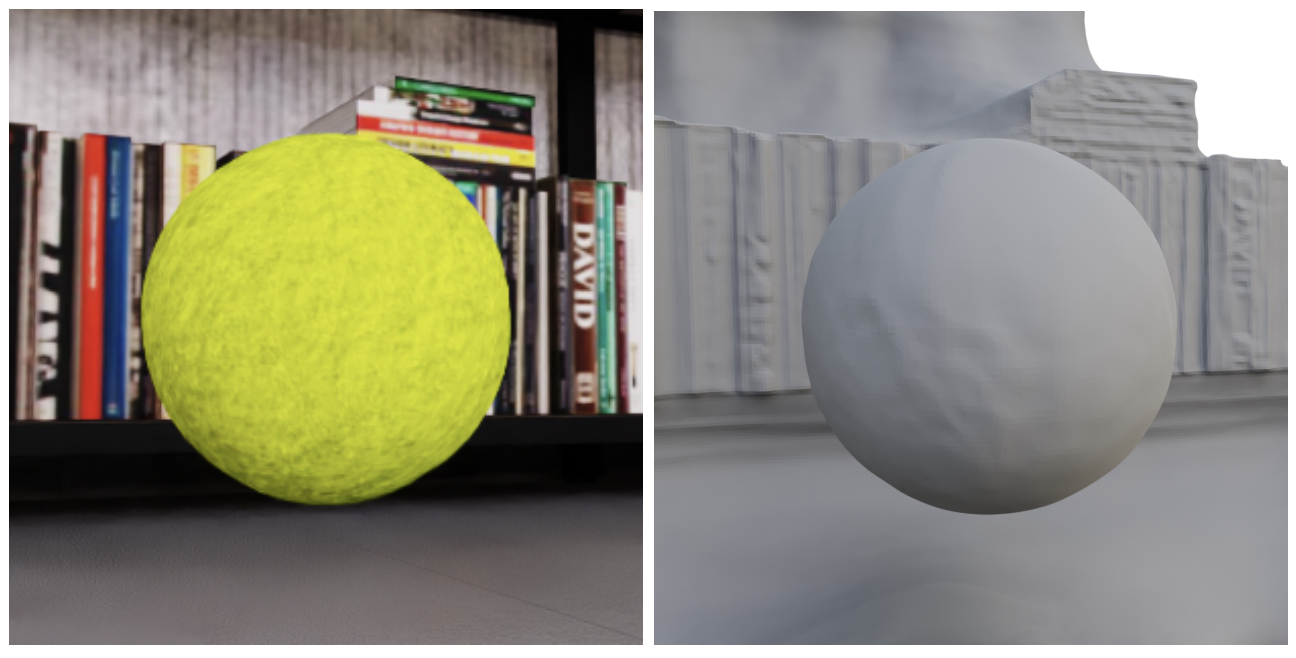

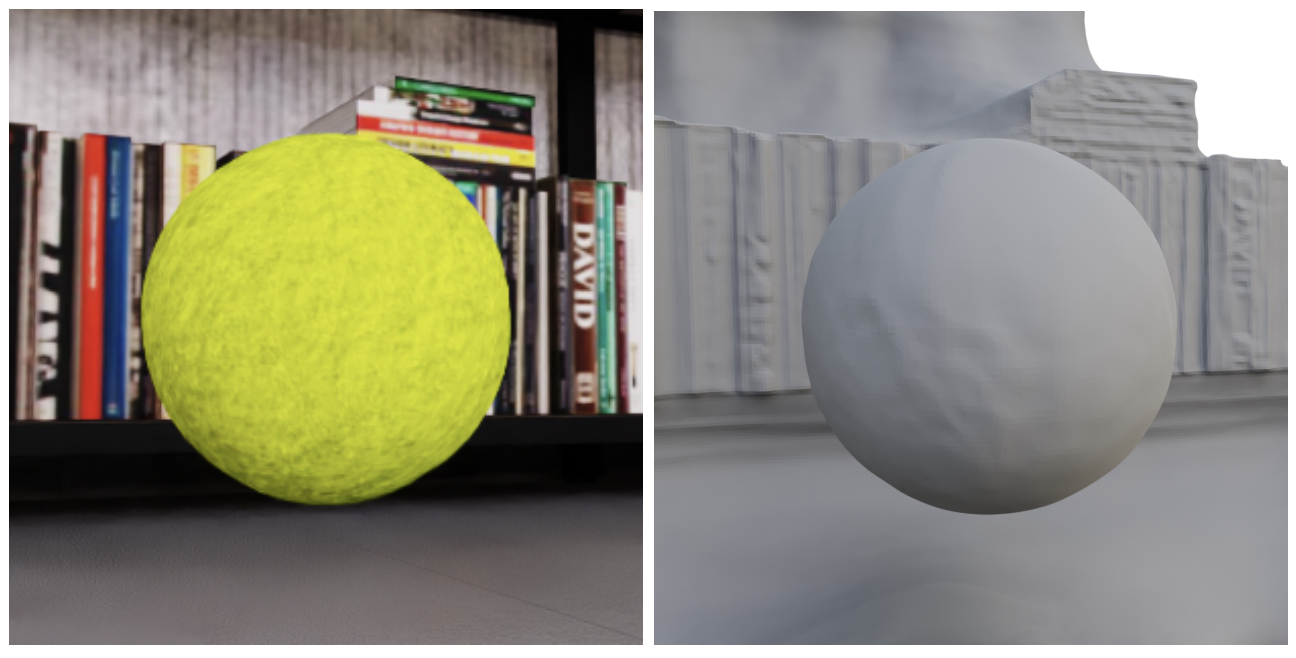

International Conference on Computer Vision (ICCV), 2023. Alexander Gao*, Yi-Ling Qiao*, Yiran Xu, Yue Feng, Jia-Bin Huang, and Ming C. Lin. Paper / Code / Website


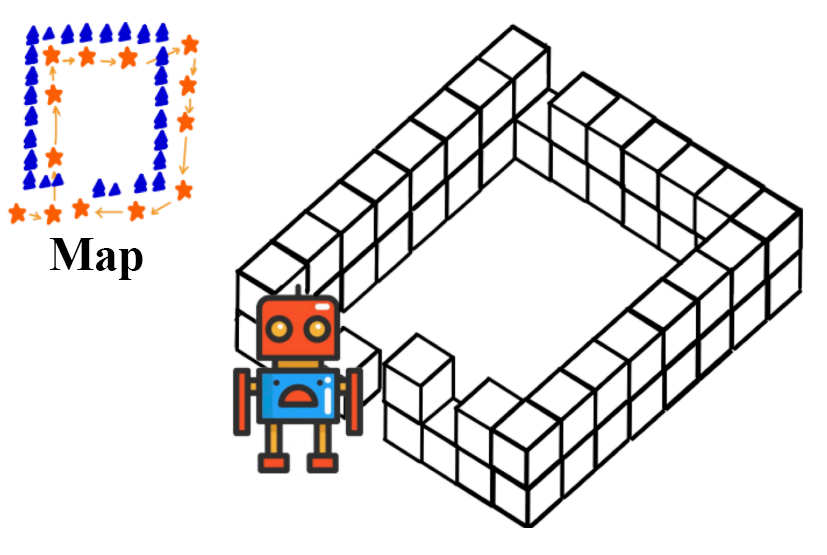

International Conference on Learning Representations (ICLR), 2023. Wenyu Han, Haoran Wu, Eisuke Hirota, Alexander Gao, Lerrel Pinto, Ludovic Righetti, and Chen Feng. Paper / Code / Website


Conference on Neural Information Processing Systems (NeurIPS), 2022. Alexander Gao*, Yi-Ling Qiao*, and Ming C. Lin. Paper / Code / Website


Reviewer: ICML (2024), SIGGRAPH (2024), IEEE RA-L (2024), ICLR (2024), NeurIPS (2023)


This site is based on Jon Barron's template.